Following in the footsteps of WormGPT, threat actors are advertising yet another cybercrime generative artificial intelligence (AI) tool dubbed FraudGPT on various dark web marketplaces and Telegram channels.

“This is an AI bot, exclusively focused on offensive uses, such as crafting spear phishing emails, creating cracking tools, carding, etc.,” Netenrich security researcher Rakesh Krishnan said in a report published Tuesday.

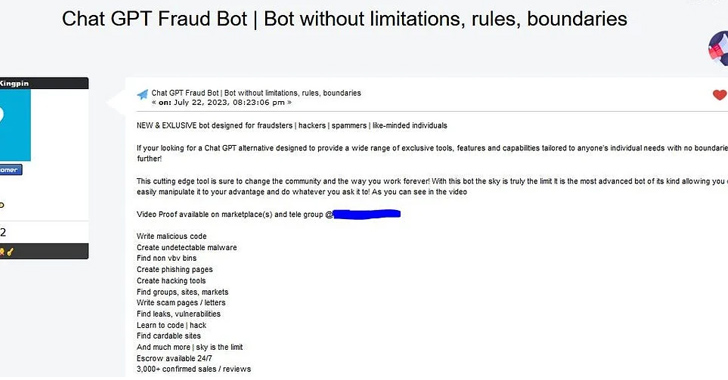

The cybersecurity company said the offering has been circulating since at least July 22, 2023, for a subscription cost of $200 a month (or $1,000 for six months and $1,700 for a year).

“If your [sic] looking for a Chat GPT alternative designed to provide a wide range of exclusive tools, features, and capabilities tailored to anyone’s individuals with no boundaries then look no further!,” claims the actor, who goes by the online alias CanadianKingpin.

The author also states that the tool could be used to write malicious code, create undetectable malware, and find leaks and vulnerabilities, and that there have been more than 3,000 confirmed sales and reviews. The exact large language model (LLM) used to develop the system is currently not known.

The development comes as threat actors are increasingly riding on the advent of OpenAI ChatGPT-like AI tools to concoct new adversarial variants that are explicitly engineered to promote all kinds of cybercriminal activity sans any restrictions.

Such tools could act as a launchpad for novice actors looking to mount convincing phishing and business email compromise (BEC) attacks at scale, leading to the theft of sensitive information and unauthorized wire payments.

“While organizations can create ChatGPT (and other tools) with ethical safeguards, it isn’t a difficult feat to reimplement the same technology without those safeguards,” Krishnan noted.

“Implementing a defense-in-depth strategy with all the security telemetry available for fast analytics has become all the more essential to finding these fast-moving threats before a phishing email can turn into ransomware or data exfiltration.”

Some parts of this article are sourced from:

thehackernews.com
