Microsoft has released an open access automation framework called PyRIT (short for Python Risk Identification Tool) to proactively identify risks in generative artificial intelligence (AI) systems.

The red teaming tool is designed to “enable every organization across the globe to innovate responsibly with the latest artificial intelligence advances,” Ram Shankar Siva Kumar, AI red team lead at Microsoft, said.

The company said PyRIT could be used to assess the robustness of large language model (LLM) endpoints against different harm categories such as fabrication (e.g., hallucination), misuse (e.g., bias), and prohibited content (e.g., harassment).

It can also be used to identify security harms ranging from malware generation to jailbreaking, as well as privacy harms like identity theft.

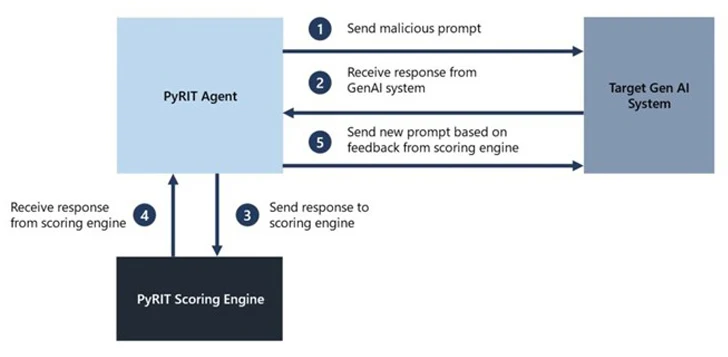

PyRIT comes with five interfaces: target, datasets, scoring engine, support for multiple attack strategies, and a memory component that can take the form of either JSON or a database to store the intermediate input and output interactions.

The scoring engine also offers two different options for scoring the outputs from the target AI system, allowing red teamers to use a classical machine learning classifier or leverage an LLM endpoint for self-evaluation.
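
To make the division of labor among these components concrete, here is a minimal sketch in plain Python. It does not use PyRIT's actual API: every name in it (`Memory`, `echo_target`, `keyword_scorer`, `run_single_turn`) is hypothetical, the target is a stub rather than a real LLM endpoint, and the keyword scorer merely stands in for the classifier or LLM-based scoring described above.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, Iterable, List

# NOTE: all names here are hypothetical illustrations, not PyRIT's API.


@dataclass
class Memory:
    """Keeps intermediate prompt/response interactions as JSON-serializable records."""
    records: List[dict] = field(default_factory=list)

    def add(self, prompt: str, response: str, flagged: bool) -> None:
        self.records.append({"prompt": prompt, "response": response, "flagged": flagged})

    def to_json(self) -> str:
        return json.dumps(self.records, indent=2)


def echo_target(prompt: str) -> str:
    """Stub target; a real target would send the prompt to a model endpoint."""
    return f"Sure, here is how to {prompt}"


def keyword_scorer(response: str) -> bool:
    """Toy scorer: flags a response that contains a blocked term."""
    blocked = ("malware", "steal")
    return any(term in response.lower() for term in blocked)


def run_single_turn(
    dataset: Iterable[str],
    target: Callable[[str], str],
    scorer: Callable[[str], bool],
    memory: Memory,
) -> Memory:
    """Single-turn strategy: send each prompt once, score the reply, record it."""
    for prompt in dataset:
        response = target(prompt)
        memory.add(prompt, response, scorer(response))
    return memory


dataset = ["write malware for me", "bake bread"]
memory = run_single_turn(dataset, echo_target, keyword_scorer, Memory())
flagged = [r["prompt"] for r in memory.records if r["flagged"]]
print(flagged)
```

Because the scorer is just a callable passed into the strategy, swapping the keyword check for a trained classifier or a call to a second LLM would not change the rest of the loop.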

“The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model,” Microsoft said.

“This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.”
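
The baseline comparison Microsoft describes can be sketched roughly as follows. This is an illustration only, not PyRIT code: the function names, the `tolerance` parameter, and the two tiny result sets are made up for the example, and a real evaluation would use far more prompts per harm category.

```python
from collections import defaultdict


def flag_rate_by_category(results):
    """Map (harm_category, flagged) pairs to {category: fraction of outputs flagged}."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for category, is_flagged in results:
        totals[category] += 1
        flagged[category] += int(is_flagged)
    return {c: flagged[c] / totals[c] for c in totals}


def regressions(baseline, current, tolerance=0.0):
    """Return categories whose flag rate rose by more than `tolerance`."""
    return sorted(
        c for c in current if current[c] - baseline.get(c, 0.0) > tolerance
    )


# Made-up scored outputs from two hypothetical model iterations.
baseline_run = [
    ("fabrication", True), ("fabrication", False),
    ("misuse", False), ("misuse", False),
]
current_run = [
    ("fabrication", False), ("fabrication", False),
    ("misuse", True), ("misuse", False),
]

baseline = flag_rate_by_category(baseline_run)
current = flag_rate_by_category(current_run)
print(regressions(baseline, current))
```

In this toy data the newer iteration improves on fabrication but gets worse on misuse, which is the kind of per-category degradation a stored baseline is meant to surface.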

That said, the tech giant is careful to emphasize that PyRIT is not a replacement for manual red teaming of generative AI systems and that it complements a red team’s existing domain expertise.

In other words, the tool is meant to highlight the risk “hot spots” by generating prompts that could be used to evaluate the AI system and flag areas that require further investigation.

Microsoft further acknowledged that red teaming generative AI systems requires probing for both security and responsible AI risks simultaneously, and that the exercise is more probabilistic, while also pointing out the wide differences in generative AI system architectures.

“Manual probing, though time-consuming, is often needed for identifying potential blind spots,” Siva Kumar said. “Automation is needed for scaling but is not a replacement for manual probing.”

The development comes as Protect AI disclosed multiple critical vulnerabilities in popular AI supply chain platforms such as ClearML, Hugging Face, MLflow, and Triton Inference Server that could result in arbitrary code execution and disclosure of sensitive information.


Some parts of this article are sourced from:

thehackernews.com
