Ambitious Employees Tout New AI Tools, Ignore Serious SaaS Security Risks

Like the SaaS shadow IT of the past, AI is putting CISOs and cybersecurity teams in a tough but familiar spot.

Employees are covertly using AI with little regard for established IT and cybersecurity review procedures. Considering ChatGPT’s meteoric rise to 100 million users within 60 days of launch, especially with little sales and marketing fanfare, employee-driven demand for AI tools will only escalate.

As new studies show some workers boosting productivity by 40% using generative AI, the pressure on CISOs and their teams to fast-track AI adoption, and to turn a blind eye to unsanctioned AI tool usage, is intensifying.

But succumbing to these pressures can introduce serious SaaS data leakage and breach risks, particularly as employees flock to AI tools developed by small businesses, solopreneurs, and indie developers.

Indie AI Startups Typically Lack the Security Rigor of Enterprise AI

Indie AI apps now number in the tens of thousands, and they’re successfully luring employees with their freemium models and product-led growth marketing strategy. According to leading offensive security engineer and AI researcher Joseph Thacker, indie AI app developers employ fewer security staff and have less security focus, less legal oversight, and less compliance.

Thacker breaks down indie AI tool risks into the following categories:

- Data leakage: AI tools, particularly generative AI using large language models (LLMs), have broad access to the prompts employees enter. Even ChatGPT chat histories have been leaked, and most indie AI tools aren’t operating with the security standards that OpenAI (the parent company of ChatGPT) applies. Nearly every indie AI tool retains prompts for “training data or debugging purposes,” leaving that data vulnerable to exposure.

- Content quality issues: LLMs are prone to hallucinations, which IBM defines as the phenomenon in which an LLM “perceives patterns or objects that are nonexistent or imperceptible to human observers, creating outputs that are nonsensical or altogether inaccurate.” If your organization hopes to rely on an LLM for content generation or optimization without human review and fact-checking protocols in place, the odds of inaccurate information being published are high. Beyond content accuracy pitfalls, a growing number of groups, such as academics and science journal editors, have voiced ethical concerns about disclosing AI authorship.

- Product vulnerabilities: In general, the smaller the organization building the AI tool, the more likely the developers will fail to address common product vulnerabilities. For example, indie AI tools can be more susceptible to prompt injection and to traditional vulnerabilities such as SSRF, IDOR, and XSS.

- Compliance risk: Indie AI’s lack of mature privacy policies and internal regulations can lead to stiff fines and penalties for non-compliance. Employers in industries or geographies with tighter SaaS data regulations, such as SOX, ISO 27001, NIST CSF, NIST 800-53, and APRA CPS 234, could find themselves in violation when employees use tools that don’t abide by these standards. Additionally, many indie AI vendors have not achieved SOC 2 compliance.

In short, indie AI vendors are generally not adhering to the frameworks and protocols that keep critical SaaS data and systems secure. These risks are amplified when AI tools are connected to enterprise SaaS systems.

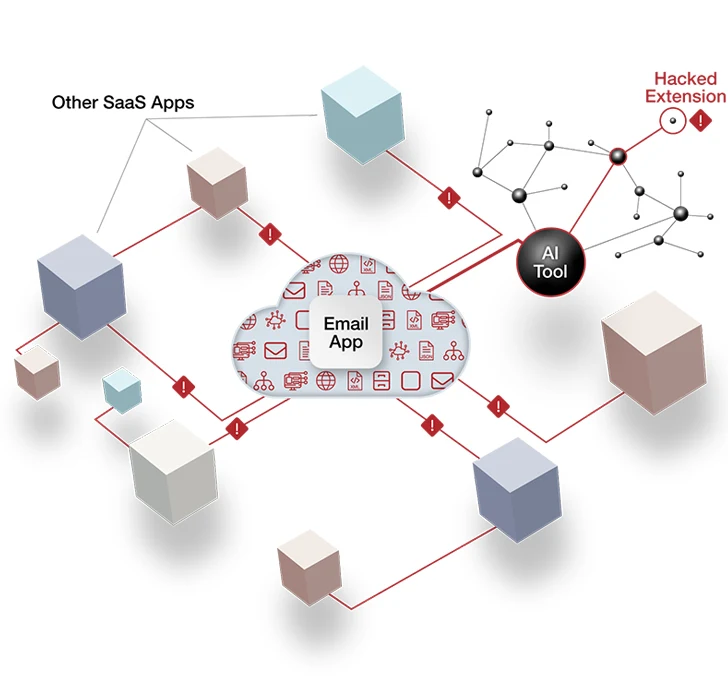

Connecting Indie AI to Enterprise SaaS Apps Boosts Productivity, and the Likelihood of Backdoor Attacks

Employees achieve (or perceive) significant process improvements and outputs with AI tools. But soon, they’ll want to turbocharge their productivity gains by connecting AI to the SaaS systems they use every day, such as Google Workspace, Salesforce, or M365.

Because indie AI tools depend on growth through word of mouth more than traditional marketing and sales tactics, indie AI vendors encourage these connections within their products and make the process relatively seamless. A Hacker News article on generative AI security risks illustrates this point with an example of an employee who finds an AI scheduling assistant to help manage time better by monitoring and analyzing the employee’s task management and meetings. But the AI scheduling assistant must connect to tools like Slack, corporate Gmail, and Google Drive to obtain the data it’s designed to analyze.

Since AI tools largely rely on OAuth access tokens to forge an AI-to-SaaS connection, the AI scheduling assistant is granted ongoing API-based communication with Slack, Gmail, and Google Drive.

Employees make AI-to-SaaS connections like this every day with little concern. They see the possible benefits, not the inherent risks. But well-intentioned employees don’t realize they might have connected a second-rate AI application to your organization’s highly sensitive data.

Figure 1: How an indie AI tool achieves an OAuth token connection with a major SaaS platform. Credit: AppOmni

AI-to-SaaS connections, like all SaaS-to-SaaS connections, inherit the user’s permission settings. This translates to a serious security risk, as most indie AI tools follow lax security standards. Threat actors target indie AI tools as the means to access the connected SaaS systems that contain the company’s crown jewels.
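One way to make inherited permissions visible is to review which third-party apps hold broad OAuth scopes. The sketch below is only an illustration: the `OAuthGrant` record shape, the sample grants, and the choice of which scopes count as "broad" are all assumptions, and a real inventory would come from each SaaS platform's admin tooling rather than a hard-coded list.

```python
from dataclasses import dataclass

# Scopes this sketch treats as broad (an assumption; adjust to your own policy).
BROAD_SCOPES = frozenset({
    "https://www.googleapis.com/auth/drive",  # full Google Drive access
    "https://mail.google.com/",               # full Gmail access
    "channels:history",                       # read Slack public channel messages
})


@dataclass(frozen=True)
class OAuthGrant:
    """One third-party app authorization (hypothetical record shape)."""
    user: str
    app_name: str
    scopes: frozenset


def flag_risky_grants(grants, approved_apps):
    """Return grants to unapproved apps that hold at least one broad scope."""
    return [
        g for g in grants
        if g.app_name not in approved_apps and g.scopes & BROAD_SCOPES
    ]


# Hypothetical inventory of OAuth grants, for illustration only.
grants = [
    OAuthGrant("ana@example.com", "Indie Scheduler AI",
               frozenset({"https://www.googleapis.com/auth/drive", "channels:history"})),
    OAuthGrant("ben@example.com", "Approved Suite",
               frozenset({"https://mail.google.com/"})),
    OAuthGrant("cy@example.com", "Indie Notes AI",
               frozenset({"https://www.googleapis.com/auth/calendar.readonly"})),
]

risky = flag_risky_grants(grants, approved_apps={"Approved Suite"})
for grant in risky:
    print(grant.user, grant.app_name, sorted(grant.scopes & BROAD_SCOPES))
```

Here only the unapproved scheduling assistant is flagged, because it is the one unapproved app holding scopes from the broad set.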

Once the threat actor has capitalized on this backdoor to your organization’s SaaS estate, they can access and exfiltrate data until their activity is noticed. Unfortunately, suspicious activity like this often flies under the radar for weeks or even years. For instance, roughly two weeks passed between the data exfiltration and public notice of the January 2023 CircleCI data breach.

Without the proper SaaS security posture management (SSPM) tooling to monitor for unauthorized AI-to-SaaS connections and detect threats like large numbers of file downloads, your organization sits at a heightened risk of SaaS data breaches. SSPM mitigates this risk considerably and constitutes a vital part of your SaaS security program. But it’s not intended to replace review procedures and protocols.
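To give a rough sense of what detecting "large numbers of file downloads" can mean in practice, the sketch below counts download events per actor within a time window and flags anyone above a threshold. It is not how any particular SSPM product works; the event fields (`actor`, `action`, `time`), the one-hour window, and the threshold of 100 are assumptions chosen for illustration.

```python
from collections import Counter
from datetime import datetime, timedelta


def flag_bulk_downloads(events, window_start, window=timedelta(hours=1), threshold=100):
    """Return {actor: count} for actors exceeding `threshold` downloads in the window."""
    window_end = window_start + window
    counts = Counter(
        event["actor"]
        for event in events
        if event["action"] == "file_download"
        and window_start <= event["time"] < window_end
    )
    return {actor: n for actor, n in counts.items() if n > threshold}


# Hypothetical audit-log events: one OAuth token downloading a file every
# 10 seconds, and one employee downloading three files.
start = datetime(2023, 11, 1, 9, 0)
events = [
    {"actor": "indie-ai-token", "action": "file_download",
     "time": start + timedelta(seconds=10 * i)}
    for i in range(150)
]
events += [
    {"actor": "ana@example.com", "action": "file_download",
     "time": start + timedelta(minutes=5 * i)}
    for i in range(3)
]

flagged = flag_bulk_downloads(events, window_start=start)
print(flagged)
```

A fixed threshold is the simplest possible rule; comparing each actor against its own historical baseline would likely produce fewer false positives.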

How to Practically Reduce Indie AI Tool Security Risks

Having explored the risks of indie AI, Thacker recommends that CISOs and cybersecurity teams focus on the fundamentals to prepare their organization for AI tools:

1. Don’t Neglect Standard Due Diligence

We start with the basics for a reason. Ensure someone on your team, or a member of Legal, reads the terms of service for any AI tools that employees request. Of course, this isn’t necessarily a safeguard against data breaches or leaks, and indie vendors may stretch the truth in hopes of placating enterprise customers. But thoroughly understanding the terms will inform your legal strategy if AI vendors break their service terms.

2. Consider Implementing (Or Revising) Application And Data Policies

An application policy provides clear guidelines and transparency to your organization. A simple “allow-list” can cover AI tools built by enterprise SaaS providers, and anything not included falls into the “disallowed” camp. Alternatively, you can establish a data policy that dictates what types of data employees can feed into AI tools. For example, you can forbid inputting any form of intellectual property into AI programs, or sharing data between your SaaS systems and AI apps.
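The two policy styles can be combined into a single check. The following is a minimal sketch, assuming a hypothetical set of approved tool names and hypothetical data-class labels; it is meant to show the logic of an allow-list plus a data policy, not a recommended taxonomy.

```python
# Hypothetical allow-list of approved AI tools.
ALLOWED_AI_TOOLS = {"enterprise-copilot", "vendor-assistant"}

# Hypothetical labels for data that may not be fed into AI tools.
FORBIDDEN_DATA_CLASSES = {"intellectual_property", "saas_export"}


def evaluate_request(tool, data_classes):
    """Return (allowed, reason) for using `tool` with the given data classes."""
    # Application policy: anything not on the allow-list is disallowed.
    if tool not in ALLOWED_AI_TOOLS:
        return (False, "tool is not on the allow-list")
    # Data policy: even approved tools may not receive forbidden data.
    blocked = sorted(set(data_classes) & FORBIDDEN_DATA_CLASSES)
    if blocked:
        return (False, "data classes not permitted: " + ", ".join(blocked))
    return (True, "permitted")


print(evaluate_request("indie-scheduler-ai", ["calendar"]))
print(evaluate_request("enterprise-copilot", ["intellectual_property"]))
print(evaluate_request("enterprise-copilot", ["meeting_notes"]))
```

Returning a reason alongside the decision keeps the policy explainable, which matters when the goal is for employees to understand the rule rather than just hit a wall.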

3. Commit To Regular Employee Training And Education

Few employees seek out indie AI tools with malicious intent. The vast majority are simply unaware of the danger they’re exposing your company to when they use unsanctioned AI.

Provide frequent training so they understand the reality of AI tool data leaks and breaches, and what AI-to-SaaS connections entail. Trainings also serve as opportune moments to explain and reinforce your policies and software review process.

4. Ask The Critical Questions In Your Vendor Assessments

As your team conducts vendor assessments of indie AI tools, insist on the same rigor you apply to enterprise companies under review. This process must include their security posture and compliance with data privacy laws. Between the team requesting the tool and the vendor itself, address questions such as:

- Who will access the AI tool? Is it limited to certain individuals or teams? Will contractors, partners, and/or customers have access?

- What individuals and companies have access to prompts submitted to the tool? Does the AI feature rely on a third party, a model provider, or a local model?

- Does the AI tool consume or in any way use external input? What would happen if prompt injection payloads were inserted into that input? What impact could that have?

- Can the tool take consequential actions, such as changes to files, users, or other objects?

- Does the AI tool have any features with the potential for traditional vulnerabilities to occur (such as the SSRF, IDOR, and XSS mentioned above)? For example, is the prompt or output rendered where XSS might be possible? Does web fetching functionality allow hitting internal hosts or the cloud metadata IP?
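The last question, about web fetching reaching internal hosts or the cloud metadata IP, can be made concrete with a small check of the kind a vendor might be expected to have. This is a sketch under assumptions: `resolve` is a hypothetical injected function that maps a hostname to a list of IP strings (a real one would wrap DNS resolution), and the fake lookup table exists only so the example runs offline.

```python
import ipaddress
from urllib.parse import urlsplit


def is_blocked_address(ip_text):
    """True for addresses a fetcher should not reach (private, loopback, link-local, etc.)."""
    ip = ipaddress.ip_address(ip_text)
    return (not ip.is_global) or ip.is_multicast


def is_url_allowed(url, resolve):
    """Allow only http(s) URLs whose host resolves exclusively to public addresses."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        return False
    addresses = resolve(parts.hostname)
    # Reject unresolvable hosts and any host with even one internal address.
    return bool(addresses) and not any(is_blocked_address(a) for a in addresses)


# Hypothetical resolver results, standing in for real DNS lookups.
FAKE_DNS = {
    "example.com": ["93.184.216.34"],
    "intranet.corp.example": ["10.0.0.5"],
    "169.254.169.254": ["169.254.169.254"],  # common cloud metadata address
}


def fake_resolve(hostname):
    return FAKE_DNS.get(hostname, [])


for candidate in (
    "https://example.com/page",
    "http://intranet.corp.example/",
    "http://169.254.169.254/latest/meta-data/",
    "file:///etc/passwd",
):
    print(candidate, is_url_allowed(candidate, fake_resolve))
```

A check like this is only part of the picture: the fetch itself has to connect to the address that was vetted, otherwise a second DNS lookup could return a different, internal address.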

AppOmni, a SaaS security vendor, has published a series of CISO Guides to AI Security that provide more detailed vendor assessment questions along with insights into the opportunities and threats AI tools present.

5. Build Relationships and Make Your Team (and Your Policies) Accessible

CISOs, security teams, and other guardians of AI and SaaS security must present themselves to business leaders and their teams as partners in navigating AI. The principles of how CISOs make security a business priority break down to strong relationships, communication, and accessible guidelines.

Showing the impact of AI-related data leaks and breaches in terms of dollars and opportunities lost makes cyber risks resonate with business teams. This improved communication is critical, but it’s only one step. You may also need to adjust how your team works with the business.

Whether you opt for application or data allow-lists, or a combination of both, ensure these guidelines are clearly written and readily available (and promoted). When employees know what data is allowed into an LLM, or which approved vendors they can choose for AI tools, your team is far more likely to be viewed as empowering, not halting, progress. If leaders or employees ask for AI tools that fall out of bounds, start the conversation with what they’re trying to accomplish and their goals. When they see you’re interested in their perspective and needs, they’re more willing to partner with you on the appropriate AI tool than to go rogue with an indie AI vendor.

The best odds of keeping your SaaS stack secure from AI tools over the long term come from creating an environment where the business sees your team as a resource, not a roadblock.

Some parts of this article are sourced from:

thehackernews.com
