Google is urging third-party Android app developers to incorporate generative artificial intelligence (GenAI) features in a responsible manner.

The new guidance from the search and advertising giant is an effort to combat problematic content, including sexual content and hate speech, created through such tools.

To that end, apps that generate content using AI must ensure they don’t create Restricted Content, have a mechanism for users to report or flag offensive information, and are marketed in a manner that accurately represents the app’s capabilities. App developers are also being encouraged to rigorously test their AI models to ensure they respect user safety and privacy.

“Be sure to test your apps across various user scenarios and safeguard them against prompts that could manipulate your generative AI feature to create harmful or offensive content,” Prabhat Sharma, director of trust and safety for Google Play, Android, and Chrome, said.

The development comes as a recent investigation from 404 Media found several apps on the Apple App Store and Google Play Store that advertised the ability to create non-consensual nude images.

Meta’s Use of Public Data for AI Sparks Concerns

The rapid adoption of AI technologies in recent years has also led to broader privacy and security concerns related to training data and model safety, providing malicious actors with a way to extract sensitive information and tamper with the underlying models to return unexpected results.

What’s more, Meta’s decision to use public information available across its products and services to help improve its AI offerings and have the “world’s best recommendation technology” has prompted Austrian privacy outfit noyb to file a complaint in 11 European countries alleging violation of GDPR privacy laws in the region.

“This information includes things like public posts or public photos and their captions,” the company announced late last month. “In the future, we may also use the information people share when interacting with our generative AI features, like Meta AI, or with a business, to develop and improve our AI products.”

Specifically, noyb has accused Meta of shifting the burden onto users (i.e., making it opt-out as opposed to opt-in) and failing to provide adequate information on how the company is planning to use the customer data.

Meta, for its part, has noted that it will be “relying on the legal basis of ‘Legitimate Interests’ for processing certain first and third-party data in the European Region and the United Kingdom” to improve AI and build better experiences. E.U. users have until June 26 to opt out of the processing, which they can do by submitting a request.

While the tech giant made it a point to spell out that the approach is aligned with how other tech companies are developing and improving their AI experiences in Europe, the Norwegian data protection authority Datatilsynet said it’s “uncertain” about the legality of the process.

“In our view, the most natural thing would have been to ask the users for consent before their posts and photos are used in this way,” the agency said in a statement.

“The European Court of Justice has already made it clear that Meta has no ‘legitimate interest’ to override users’ right to data protection when it comes to advertising,” noyb’s Max Schrems said. “Yet the company is trying to use the same arguments for the training of undefined ‘AI technology.’”

Microsoft’s Recall Faces More Scrutiny

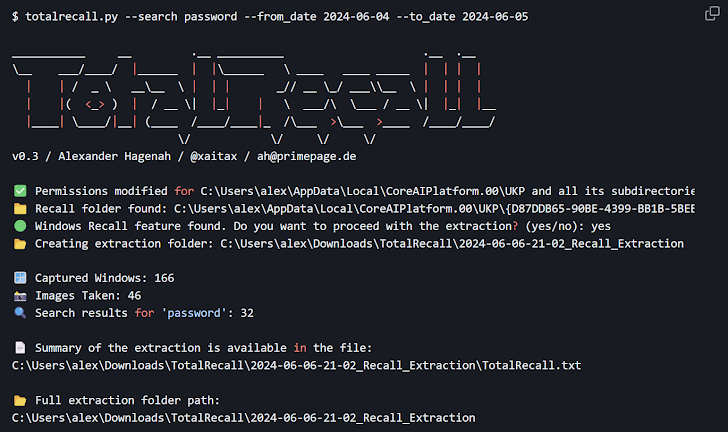

Meta’s latest regulatory kerfuffle also arrives at a time when Microsoft’s own AI-powered feature called Recall has received swift backlash over the privacy and security risks that could arise from capturing screenshots of users’ activities on their Windows PCs every five seconds and turning them into a searchable archive.

Security researcher Kevin Beaumont, in a new analysis, found that it’s possible for a malicious actor to deploy an information stealer and exfiltrate the database that stores the information parsed from the screenshots. The only prerequisite to pulling this off is that accessing the data requires administrator privileges on a user’s machine.

“Recall enables threat actors to automate scraping everything you’ve ever looked at within seconds,” Beaumont said. “[Microsoft] should recall Recall and rework it to be the feature it deserves to be, delivered at a later date.”

Other researchers have similarly demonstrated tools like TotalRecall that show how Recall is ripe for abuse by extracting highly sensitive information from the database. “Windows Recall stores everything locally in an unencrypted SQLite database, and the screenshots are simply saved in a folder on your PC,” Alexander Hagenah, who developed TotalRecall, said.
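To illustrate why an unencrypted SQLite database offers no protection once its file can be read, here is a minimal Python sketch. It does not touch Recall itself: the table name `captures`, its columns, and the sample row are hypothetical stand-ins, and the demo database is one the script creates itself. The only point is that a plaintext SQLite file carries a well-known header and can be opened and queried with no key or password.

```python
import sqlite3
from pathlib import Path

# Every plaintext SQLite 3 file begins with this 16-byte header string.
SQLITE_MAGIC = b"SQLite format 3\x00"


def looks_unencrypted(path: str) -> bool:
    """Return True if the file starts with the standard SQLite header."""
    with open(path, "rb") as fh:
        return fh.read(16) == SQLITE_MAGIC


def dump_rows(path: str, table: str) -> list[tuple]:
    """Open the database read-only and return every row of one table.

    No key is supplied anywhere: read access to the file is all that
    is needed.
    """
    uri = Path(path).resolve().as_uri() + "?mode=ro"
    conn = sqlite3.connect(uri, uri=True)
    try:
        return conn.execute(f'SELECT * FROM "{table}"').fetchall()
    finally:
        conn.close()


def build_demo_db(path: str) -> None:
    """Create a small demo database (hypothetical schema, not Recall's)."""
    conn = sqlite3.connect(path)
    try:
        conn.execute("CREATE TABLE captures (window_title TEXT, ocr_text TEXT)")
        conn.execute(
            "INSERT INTO captures VALUES (?, ?)",
            ("Online banking", "Account balance: 1,234.56"),
        )
        conn.commit()
    finally:
        conn.close()


if __name__ == "__main__":
    import os
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        demo = os.path.join(tmp, "demo.db")
        build_demo_db(demo)
        print("plaintext header:", looks_unencrypted(demo))
        print(dump_rows(demo, "captures"))
```

In this sketch the file-system permissions are the only barrier, which is why the researchers quoted here focus on who can read the file rather than on breaking any encryption.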

As of June 6, 2024, TotalRecall has been updated to no longer require admin rights, using one of the two methods security researcher James Forshaw outlined to bypass the administrator privilege requirement in order to access the Recall data.

“It is only protected through being [access control list]’ed to SYSTEM and so any privilege escalation (or non-security boundary *cough*) is sufficient to leak the information,” Forshaw said.

The first technique entails impersonating a program called AIXHost.exe by acquiring its token, or, even better, taking advantage of the current user’s privileges to modify the access control lists and gain access to the full database.

That said, it’s worth pointing out that Recall is currently in preview and Microsoft can still make changes to the application before it becomes broadly available to all users later this month. It’s expected to be enabled by default for compatible Copilot+ PCs.

Some parts of this article are sourced from:

thehackernews.com