2023 has seen its fair share of cyber attacks, but one attack vector has proven more prominent than the rest: non-human access. With 11 high-profile attacks in 13 months and an ever-growing ungoverned attack surface, non-human identities are the new perimeter, and 2023 is only the beginning.

Why non-human access is a cybercriminal’s paradise

People always look for the easiest way to get what they want, and cybercrime is no exception. Threat actors look for the path of least resistance, and in 2023 that path appears to have been non-user access credentials (API keys, tokens, service accounts and secrets).

These non-user access credentials are used to connect apps and resources to other cloud services. What makes them a true hacker's dream is that they lack the security measures that protect user credentials (MFA, SSO or other IAM policies), and they are mostly over-permissive, ungoverned and never revoked. In fact, 50% of the active access tokens connecting Salesforce and third-party apps are unused. In GitHub and GCP the figure reaches 33%.*
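Unused tokens are one of the easier problems to detect once an inventory exists. The sketch below is illustrative only: it assumes a hypothetical inventory of tokens with a "last used" timestamp (the `AccessToken` record, its field names and the 90-day idle threshold are all assumptions, not part of any vendor API) and flags tokens that were never used or have been idle past the threshold.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class AccessToken:
    # Hypothetical inventory record; field names are illustrative only.
    token_id: str
    platform: str
    last_used: Optional[datetime]  # None means the token has never been used


def find_unused_tokens(
    tokens: List[AccessToken], now: datetime, max_idle_days: int = 90
) -> List[AccessToken]:
    """Return tokens that were never used or have been idle longer than max_idle_days."""
    cutoff = now - timedelta(days=max_idle_days)
    return [t for t in tokens if t.last_used is None or t.last_used < cutoff]


now = datetime(2023, 12, 1, tzinfo=timezone.utc)
inventory = [
    AccessToken("sf-app-1", "Salesforce", now - timedelta(days=5)),
    AccessToken("sf-app-2", "Salesforce", now - timedelta(days=200)),
    AccessToken("gh-ci", "GitHub", None),
]
stale = find_unused_tokens(inventory, now)
print([t.token_id for t in stale])  # ['sf-app-2', 'gh-ci']
```

Flagged tokens are candidates for revocation review rather than automatic deletion, since "unused" depends on how reliably the platform records last use.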

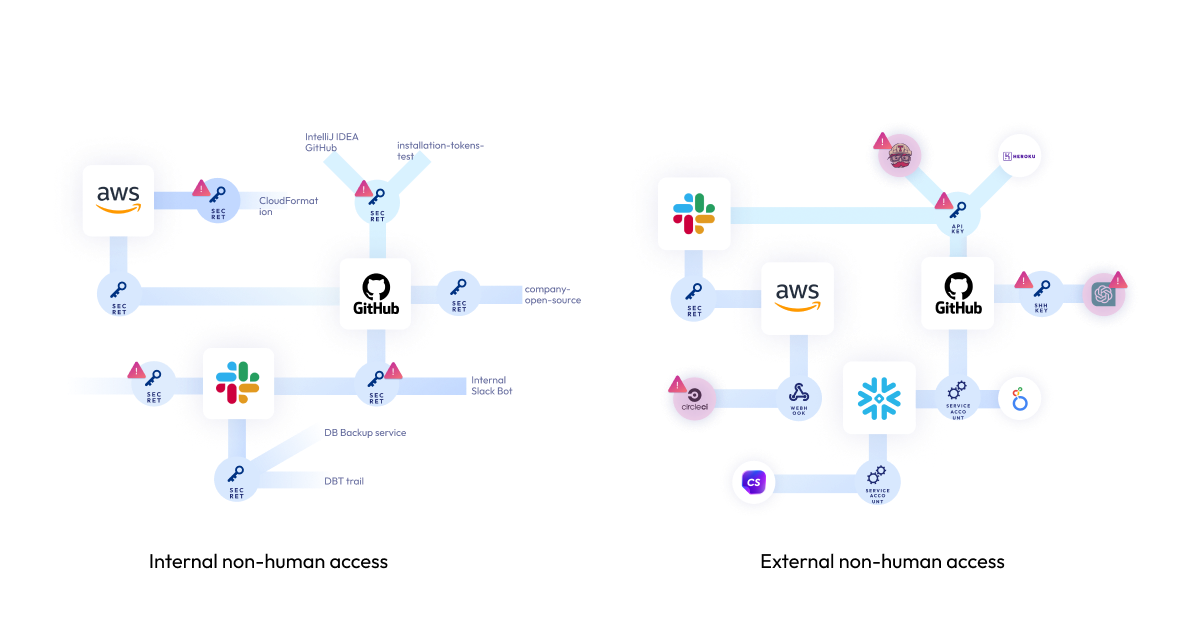

So how do cybercriminals exploit these non-human access credentials? To understand the attack paths, we first need to understand the types of non-human access and identities. Generally, there are two types of non-human access: external and internal.

External non-human access is created by employees connecting third-party tools and services to core business and engineering environments like Salesforce, Microsoft 365, Slack, GitHub and AWS to streamline processes and increase agility. These connections are made through API keys, service accounts, OAuth tokens and webhooks that are owned by the third-party app or service (the non-human identity). With the growing trend of bottom-up software adoption and freemium cloud services, many of these connections are regularly made by individual employees without any security governance and, worse, from unvetted sources. Astrix research shows that 90% of the apps connected to Google Workspace environments are non-marketplace apps, meaning they were not vetted by an official app store. In Slack the figure reaches 77%, and in GitHub 50%.*

Internal non-human access is similar, but it is created with internal access credentials, also known as "secrets". R&D teams regularly generate secrets that connect different resources and services. These secrets are often scattered across multiple secret managers (vaults), and the security team has no visibility into where they are, whether they are exposed, what they grant access to, or whether they are misconfigured. In fact, 74% of Personal Access Tokens in GitHub environments have no expiration. Similarly, 59% of the webhooks in GitHub are misconfigured, meaning they are unencrypted and unassigned.*
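Both findings can be checked mechanically. The sketch below is an assumption-laden illustration, not GitHub's API: the `PersonalAccessToken` and `Webhook` records are hypothetical inventory shapes, and because the source does not define "unencrypted and unassigned", it reads "unencrypted" as a plain-HTTP payload URL or disabled TLS verification, and treats a missing signing secret as a related weakness.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional
from urllib.parse import urlparse


@dataclass
class PersonalAccessToken:
    # Hypothetical inventory record, not a real GitHub API response shape.
    name: str
    expires_at: Optional[datetime]  # None models "no expiration"


@dataclass
class Webhook:
    # Hypothetical inventory record for a repository webhook.
    url: str
    has_secret: bool   # True if a shared signing secret is configured
    verify_ssl: bool   # True if TLS certificate verification is enabled


def tokens_without_expiry(tokens: List[PersonalAccessToken]) -> List[str]:
    """Names of tokens that never expire."""
    return [t.name for t in tokens if t.expires_at is None]


def webhook_issues(hook: Webhook) -> List[str]:
    """Configuration weaknesses found on a single webhook (assumed policy)."""
    issues = []
    if urlparse(hook.url).scheme != "https":
        issues.append("payloads sent over plain HTTP")
    elif not hook.verify_ssl:
        issues.append("TLS certificate verification disabled")
    if not hook.has_secret:
        issues.append("no signing secret")
    return issues


pats = [
    PersonalAccessToken("deploy-bot", None),
    PersonalAccessToken("ci-runner", datetime(2024, 3, 1, tzinfo=timezone.utc)),
]
print(tokens_without_expiry(pats))  # ['deploy-bot']
print(webhook_issues(Webhook("http://hooks.example.com/build", False, True)))
# ['payloads sent over plain HTTP', 'no signing secret']
```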

Schedule a live demo of Astrix, a leader in non-human identity security

2023's high-profile attacks exploiting non-human access

This threat is anything but theoretical. In 2023, several major brands fell victim to non-human access exploits, with hundreds of customers affected. In these attacks, attackers take advantage of exposed or stolen access credentials to penetrate organizations' most sensitive core systems and, in the case of external access, to reach their customers' environments (supply chain attacks). Some of these high-profile attacks include:

- Okta (October 2023): Attackers used a leaked service account to access Okta's support case management system. This allowed the attackers to view files uploaded by a number of Okta customers as part of recent support cases.

- GitHub Dependabot (September 2023): Hackers stole GitHub Personal Access Tokens (PATs). These tokens were then used to make unauthorized commits, posing as Dependabot, to both public and private GitHub repositories.

- Microsoft SAS Key (September 2023): A SAS token published by Microsoft's AI researchers actually granted full access to the entire Storage account it was created on, leading to a leak of over 38TB of extremely sensitive information. These permissions were available to attackers for more than 2 years (!).

- Slack GitHub Repositories (January 2023): Threat actors gained access to Slack's externally hosted GitHub repositories via a "limited" number of stolen Slack employee tokens. From there, they were able to download private code repositories.

- CircleCI (January 2023): An engineering employee's computer was compromised by malware that bypassed their antivirus solution. The compromised machine allowed the threat actors to access and steal session tokens. Stolen session tokens give threat actors the same access as the account owner, even when the accounts are protected with two-factor authentication.
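The Microsoft SAS case illustrates a check that can run before a link is ever shared: an Azure SAS URL carries its own permissions (`sp`) and expiry (`se`) in the query string. The sketch below is a minimal illustration under stated assumptions: the 30-day lifetime policy, the subset of "modifying" permission letters, and the example URL and values are hypothetical, and it only inspects the URL rather than querying Azure.

```python
from datetime import datetime, timedelta, timezone
from typing import List
from urllib.parse import parse_qs, urlparse

# SAS permission letters that allow modifying data: write, delete, add, create.
# Illustrative subset; SAS defines further permission letters.
MODIFYING_PERMISSIONS = set("wdac")


def audit_sas_url(url: str, now: datetime, max_lifetime_days: int = 30) -> List[str]:
    """Flag a SAS URL whose permissions or lifetime exceed a (hypothetical) policy."""
    params = parse_qs(urlparse(url).query)
    findings = []

    perms = params.get("sp", [""])[0]  # 'sp' = signed permissions
    risky = sorted(set(perms) & MODIFYING_PERMISSIONS)
    if risky:
        findings.append("grants modifying permissions: " + "".join(risky))

    expiry_raw = params.get("se", [None])[0]  # 'se' = signed expiry
    if expiry_raw is None:
        findings.append("no expiry parameter found")
    else:
        # fromisoformat() only accepts a trailing 'Z' from Python 3.11 on.
        expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
        if expiry.tzinfo is None:
            expiry = expiry.replace(tzinfo=timezone.utc)
        if expiry - now > timedelta(days=max_lifetime_days):
            findings.append(
                f"expires {expiry.date()}, beyond the {max_lifetime_days}-day policy"
            )
    return findings


now = datetime(2023, 9, 18, tzinfo=timezone.utc)
url = ("https://examplestorage.blob.core.windows.net/"
       "?sv=2021-06-08&ss=b&srt=sco&sp=rwdlac&se=2051-10-05T00:00:00Z&sig=REDACTED")
for finding in audit_sas_url(url, now):
    print(finding)
# grants modifying permissions: acdw
# expires 2051-10-05, beyond the 30-day policy
```

A read-only, short-lived token such as `?sp=rl&se=2023-09-20T00:00:00Z` produces no findings under the same policy.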

The impact of GenAI access

As one might expect, the broad adoption of GenAI tools and services exacerbates the non-human access problem. GenAI gained enormous popularity in 2023, and that popularity is only likely to grow. With ChatGPT becoming the fastest-growing app in history, and AI-powered apps being downloaded 1506% more than last year, the security risks of using and connecting often unvetted GenAI apps to core business systems are already causing sleepless nights for security leaders. The numbers from Astrix Research offer further evidence of this attack surface: 32% of GenAI apps connected to Google Workspace environments have very wide access permissions (read, write, delete).*
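A statistic like "32% of GenAI apps have very wide permissions" boils down to classifying each connected app's OAuth grants. The sketch below assumes a hypothetical export mapping app names to granted Google OAuth scope strings; the two scopes listed are real full-access scopes (all Drive files, full Gmail), but the set is deliberately non-exhaustive and the app names are invented for illustration.

```python
from typing import Dict, Iterable, List

# Illustrative, non-exhaustive set of Google OAuth scopes that grant full
# read/write/delete access to a user's data.
BROAD_SCOPES = {
    "https://www.googleapis.com/auth/drive",  # all Drive files
    "https://mail.google.com/",               # full Gmail access
}


def flag_broad_apps(grants: Dict[str, Iterable[str]]) -> List[str]:
    """Names of connected apps holding at least one broad scope."""
    return sorted(app for app, scopes in grants.items() if BROAD_SCOPES.intersection(scopes))


def broad_share(grants: Dict[str, Iterable[str]]) -> float:
    """Fraction of connected apps holding at least one broad scope."""
    return len(flag_broad_apps(grants)) / len(grants) if grants else 0.0


# Hypothetical app names and grants.
grants = {
    "ai-notes": ["https://www.googleapis.com/auth/drive"],
    "ai-summarizer": ["https://www.googleapis.com/auth/drive.readonly"],
    "ai-mail": ["https://mail.google.com/", "openid"],
}
print(flag_broad_apps(grants))        # ['ai-mail', 'ai-notes']
print(round(broad_share(grants), 2))  # 0.67
```

A narrower alternative such as `drive.readonly` is not flagged, which is the kind of least-privilege grant a review would push apps toward.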

The risks of GenAI access are making waves industry-wide. In a recent report titled "Emerging Tech: Top 4 Security Risks of GenAI", Gartner describes the risks that come with the widespread use of GenAI tools and technologies. According to the report, "The use of generative AI (GenAI) large language models (LLMs) and chat interfaces, especially connected to third-party solutions outside the organization firewall, represent a widening of attack surfaces and security threats to enterprises."

Security has to be an enabler

Since non-human access is the direct result of cloud adoption and automation, both welcome trends that contribute to growth and efficiency, security must support it. With security leaders constantly striving to be enablers rather than blockers, an approach for securing non-human identities and their access credentials is no longer optional.

Poorly secured non-human access, both external and internal, greatly increases the risk of supply chain attacks, data breaches, and compliance violations. Security policies, along with automated tools to enforce them, are a must for anyone looking to secure this volatile attack surface while allowing the business to reap the benefits of automation and hyper-connectivity.

*According to Astrix Research data, collected from enterprise environments of organizations with 1,000-10,000 employees

Some parts of this article are sourced from:

thehackernews.com