The U.S. National Institute of Standards and Technology (NIST) is calling attention to the privacy and security challenges that arise as a result of the increased deployment of artificial intelligence (AI) systems in recent years.

“These security and privacy challenges include the potential for adversarial manipulation of training data, adversarial exploitation of model vulnerabilities to adversely affect the performance of the AI system, and even malicious manipulations, modifications or mere interaction with models to exfiltrate sensitive information about people represented in the data, about the model itself, or proprietary enterprise data,” NIST said.

As AI systems become integrated into online services at a rapid pace, in part driven by the emergence of generative AI systems like OpenAI ChatGPT and Google Bard, the models powering these technologies face a number of threats at various stages of machine learning operations.

These include corrupted training data, security flaws in the software components, data model poisoning, supply chain weaknesses, and privacy breaches arising as a result of prompt injection attacks.

“For the most part, software developers need more people to use their product so it can get better with exposure,” NIST computer scientist Apostol Vassilev said. “But there is no guarantee the exposure will be good. A chatbot can spew out bad or toxic information when prompted with carefully designed language.”

The attacks, which can have significant impacts on availability, integrity, and privacy, are broadly classified as follows:

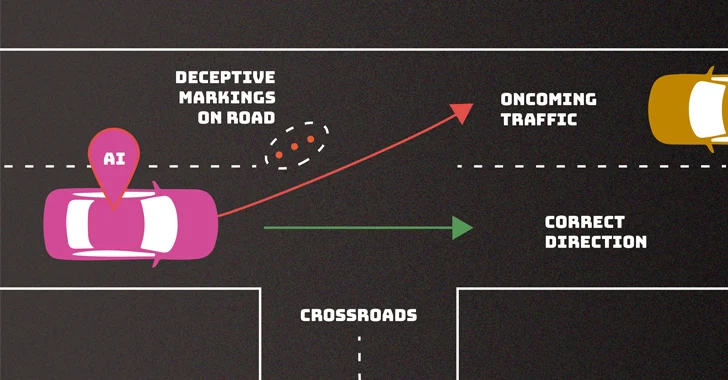

- Evasion attacks, which aim to generate adversarial output after a model is deployed

- Poisoning attacks, which target the training phase of the algorithm by introducing corrupted data

- Privacy attacks, which aim to glean sensitive information about the system or the data it was trained on by posing questions that circumvent existing guardrails

- Abuse attacks, which aim to compromise legitimate sources of information, such as a webpage with incorrect pieces of information, to repurpose the system’s intended use
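
To make the difference between the first two categories concrete, here is a minimal Python sketch. It is not taken from the NIST report: the one-dimensional nearest-centroid classifier, the data points, and the labels are all hypothetical and chosen only for illustration. It shows an evasion attack (the model is untouched, the input is nudged across the decision boundary) and a poisoning attack (mislabeled points are injected into the training set so the boundary itself moves).

```python
from statistics import mean


def train_centroids(samples):
    """Return the mean feature value for each label (a toy 'model')."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Assign the label whose centroid is closest to the input value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


# Hypothetical clean training data: (feature, label) pairs.
clean_data = [
    (1.0, "benign"), (2.0, "benign"), (3.0, "benign"),
    (7.0, "malicious"), (8.0, "malicious"), (9.0, "malicious"),
]
model = train_centroids(clean_data)  # centroids at 2.0 and 8.0

# Evasion: the deployed model is unchanged; the attacker perturbs the input.
original_input = 5.5
evasive_input = 4.5  # small shift across the decision boundary at 5.0
print(predict(model, original_input))  # malicious
print(predict(model, evasive_input))   # benign

# Poisoning: the attacker injects mislabeled samples before training.
poison = [(8.0, "benign"), (9.0, "benign"), (10.0, "benign")]
poisoned_model = train_centroids(clean_data + poison)  # benign centroid moves to 5.5
print(predict(poisoned_model, original_input))  # benign
```

Real attacks target far larger models and datasets, but the split is the same: evasion happens at inference time, while poisoning happens before or during training.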

Such attacks, NIST said, can be carried out by threat actors with full knowledge (white-box), minimal knowledge (black-box), or a partial understanding of some aspects of the AI system (gray-box).

The agency further noted the lack of robust mitigation measures to counter these risks, urging the broader tech community to “come up with better defenses.”

The development comes more than a month after the U.K., the U.S., and international partners from 16 other countries released guidelines for the development of secure artificial intelligence (AI) systems.

“Despite the significant progress AI and machine learning have made, these technologies are vulnerable to attacks that can cause spectacular failures with dire consequences,” Vassilev said. “There are theoretical problems with securing AI algorithms that simply haven’t been solved yet. If anyone says otherwise, they are selling snake oil.”


Some parts of this article are sourced from:

thehackernews.com
