Microsoft Copilot has been called one of the most powerful productivity tools on the planet.

Copilot is an AI assistant that lives inside each of your Microsoft 365 apps: Word, Excel, PowerPoint, Teams, Outlook, and so on. Microsoft’s dream is to take the drudgery out of daily work and let humans focus on being creative problem-solvers.

What makes Copilot a different beast than ChatGPT and other AI tools is that it has access to everything you have ever worked on in 365. Copilot can instantly search and compile data from across your documents, presentations, email, calendar, notes, and contacts.

And therein lies the problem for information security teams. Copilot can access all the sensitive data that a user can access, which is often far too much. On average, 10% of a company’s M365 data is open to all employees.

Copilot can also rapidly generate net new sensitive data that must be protected. Prior to the AI revolution, humans’ ability to create and share data far outpaced the capacity to protect it. Just look at data breach trends. Generative AI pours kerosene on this fire.

There is a lot to unpack when it comes to generative AI as a whole: model poisoning, hallucination, deepfakes, and so forth. In this post, however, I’m going to focus specifically on data security and how your team can ensure a safe Copilot rollout.

Microsoft 365 Copilot use cases

The use cases of generative AI with a collaboration suite like M365 are limitless. It’s easy to see why so many IT and security teams are clamoring to get early access and preparing their rollout plans. The productivity boosts will be enormous.

For example, you can open a blank Word document and ask Copilot to draft a proposal for a client based on a target data set, which can include OneNote pages, PowerPoint decks, and other office documents. In a matter of seconds, you have a full-blown proposal.

Here are a few more examples Microsoft gave during its launch event:

- Copilot can join your Teams meetings and summarize in real time what is being discussed, capture action items, and tell you which questions were unresolved in the meeting.

- Copilot in Outlook can help you triage your inbox, prioritize emails, summarize threads, and generate replies for you.

- Copilot in Excel can analyze raw data and give you insights, trends, and suggestions.

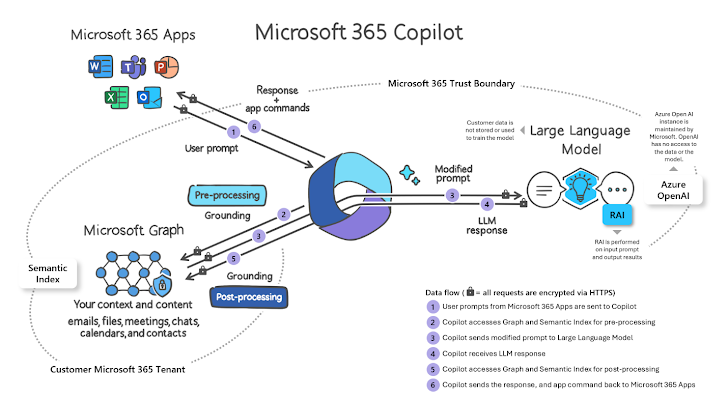

How Microsoft 365 Copilot works

Here is a simple overview of how a Copilot prompt is processed:

- A user inputs a prompt in an app like Word, Outlook, or PowerPoint.

- Microsoft gathers the user’s business context based on their M365 permissions.

- The prompt is sent to the LLM (like GPT4) to generate a response.

- Microsoft performs post-processing responsible AI checks.

- Microsoft generates a response and commands back to the M365 app.

Image source: Microsoft
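The steps above can be sketched as a toy pipeline. This is only an illustration of the permission-trimming idea, not Microsoft's implementation: `Document`, `gather_context`, `fake_llm`, `post_process`, and `handle_prompt` are all hypothetical names, the ACL is a plain set of user names, and the "LLM" is a stub that just lists the documents it was given.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_users: frozenset  # toy ACL: users with at least view permission


def gather_context(user, prompt, corpus):
    """Step 2: keep documents the user can view that share a word with the prompt."""
    words = set(prompt.lower().split())
    return [
        doc for doc in corpus
        if user in doc.allowed_users and words & set(doc.text.lower().split())
    ]


def fake_llm(prompt, context):
    """Step 3: stand-in for the LLM call; it only names its sources."""
    sources = ", ".join(doc.doc_id for doc in context) or "no sources"
    return f"Draft for '{prompt}' using: {sources}"


def post_process(response):
    """Step 4: stand-in for responsible AI checks (a no-op in this sketch)."""
    return response


def handle_prompt(user, prompt, corpus):
    """Steps 1 to 5: prompt in, permission-trimmed context, response out."""
    context = gather_context(user, prompt, corpus)
    return post_process(fake_llm(prompt, context))


corpus = [
    Document("salaries.xlsx", "salary proposal data", frozenset({"alice", "bob"})),
    Document("board-notes.docx", "board proposal notes", frozenset({"alice"})),
]

print(handle_prompt("bob", "proposal", corpus))
print(handle_prompt("alice", "proposal", corpus))
```

The point of the sketch is that the response is only as narrow as the ACLs: if `bob` had been added to the second document's `allowed_users` by an overly broad share, that document would flow into his draft too.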

Microsoft 365 Copilot security model

With Microsoft, there is always an extreme tension between productivity and security.

This was on display during the coronavirus pandemic, when IT teams were swiftly deploying Microsoft Teams without first fully understanding how the underlying security model worked or how in-shape their organization’s M365 permissions, groups, and link policies were.

The good news:

- Tenant isolation. Copilot only uses data from the current user’s M365 tenant. The AI tool will not surface data from other tenants that the user may be a guest in, nor any tenants that might be set up with cross-tenant sync.

- Training boundaries. Copilot does not use any of your business data to train the foundational LLMs that Copilot uses for all tenants. You shouldn’t have to worry about your proprietary data showing up in responses to other users in other tenants.

The bad news:

- Permissions. Copilot surfaces all organizational data to which individual users have at least view permissions.

- Labels. Copilot-generated content will not inherit the MPIP labels of the files Copilot sourced its response from.

- Humans. Copilot’s responses aren’t guaranteed to be 100% factual or safe; humans must take responsibility for reviewing AI-generated content.

Let’s take the bad news one by one.

Permissions

Granting Copilot access to only what a user can access would be an excellent idea if companies were able to easily enforce least privilege in Microsoft 365.

Microsoft states in its Copilot data security documentation:

“It’s important that you’re using the permission models available in Microsoft 365 services, such as SharePoint, to help ensure the right users or groups have the right access to the right content within your organization.”

Source: Data, Privacy, and Security for Microsoft 365 Copilot

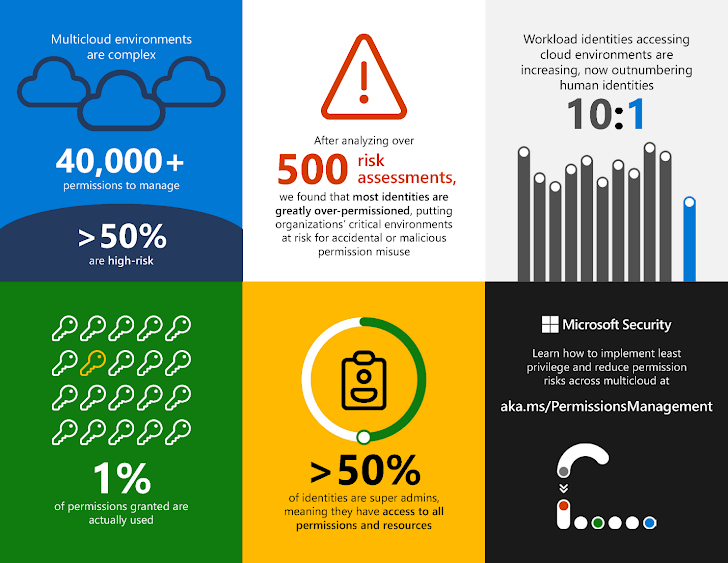

We know empirically, however, that most organizations are about as far from least privilege as they can be. Just take a look at some of the stats from Microsoft’s own State of Cloud Permissions Risk report.

This picture matches what Varonis sees when we perform thousands of Data Risk Assessments for companies using Microsoft 365 each year. In our report, The Great SaaS Data Exposure, we found that the average M365 tenant has:

- 40+ million unique permissions

- 113K+ sensitive records shared publicly

- 27K+ sharing links

Why does this happen? Microsoft 365 permissions are extremely complex. Just think about all the ways in which a user can gain access to data:

- Direct user permissions

- Microsoft 365 group permissions

- SharePoint local permissions (with custom levels)

- Guest access

- External access

- Public access

- Link access (anyone, org-wide, direct, guest)
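To see why these paths add up quickly, here is a minimal sketch that unions a user's access across several grant types. It is a simplified model rather than how Microsoft 365 evaluates permissions: `Grant`, `effective_access`, the path names, and the `"*"` convention for org-wide links are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    resource: str
    path: str       # how access was granted, e.g. "direct", "group", "org-wide-link"
    principal: str  # user name, group name, or "*" for everyone in the org


def effective_access(user, groups, grants):
    """Map each resource the user can reach to the set of paths that grant it."""
    principals = {user, "*"} | set(groups)
    access = {}
    for grant in grants:
        if grant.principal in principals:
            access.setdefault(grant.resource, set()).add(grant.path)
    return access


grants = [
    Grant("budget.xlsx", "direct", "alice"),
    Grant("budget.xlsx", "group", "finance"),
    Grant("roadmap.pptx", "org-wide-link", "*"),
    Grant("hr-cases.docx", "sharepoint-local", "hr"),
]

alice = effective_access("alice", ["finance"], grants)
bob = effective_access("bob", [], grants)
print(alice)
print(bob)
```

Even in this toy model, revoking Alice's direct grant on `budget.xlsx` changes nothing, because the group path still applies, and Bob reaches `roadmap.pptx` without anyone having granted him anything explicitly.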

To make matters worse, permissions are mostly in the hands of end users, not IT or security teams.

Labels

Microsoft relies heavily on sensitivity labels to enforce DLP policies, apply encryption, and broadly prevent data leaks. In practice, however, getting labels to work is difficult, especially if you rely on humans to apply sensitivity labels.

Microsoft paints a rosy picture of labeling and blocking as the ultimate safety net for your data. Reality reveals a bleaker scenario. As humans create data, labeling frequently lags behind or becomes outdated.

Blocking or encrypting data can add friction to workflows, and labeling technologies are limited to specific file types. The more labels an organization has, the more confusing it can become for users. This is especially intense for larger organizations.

The efficacy of label-based data protection will surely degrade when we have AI generating orders of magnitude more data requiring accurate and auto-updating labels.

Are my labels okay?

Varonis can validate and improve an organization’s Microsoft sensitivity labeling by scanning, discovering, and fixing:

- Sensitive files without a label

- Sensitive files with an incorrect label

- Non-sensitive files with a sensitive label
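The three mismatch cases above can be illustrated with a small audit function. This is a hedged sketch, not Varonis's or Microsoft's classification logic: the single SSN-style regex stands in for a real classifier, and the label names in `SENSITIVE_LABELS` are assumed for the example.

```python
import re

# Assumption: anything resembling a US Social Security number counts as sensitive.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

# Assumption: these label names mark content as sensitive in this toy tenant.
SENSITIVE_LABELS = {"Confidential", "Highly Confidential"}


def audit(text, label):
    """Compare what the content looks like with the label it carries."""
    sensitive = bool(SSN_PATTERN.search(text))
    if sensitive and label is None:
        return "sensitive, no label"
    if sensitive and label not in SENSITIVE_LABELS:
        return "sensitive, incorrect label"
    if not sensitive and label in SENSITIVE_LABELS:
        return "non-sensitive, sensitive label"
    return "ok"


files = [
    ("Employee SSN: 123-45-6789", None),
    ("Employee SSN: 123-45-6789", "Public"),
    ("Cafeteria lunch menu", "Confidential"),
    ("Cafeteria lunch menu", None),
]

for text, label in files:
    print(f"{label!s:>14}: {audit(text, label)}")
```

A real scan would need far richer classification rules, but the comparison step has the same shape: classify the content independently, then check the result against the label.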

Humans

AI can make humans lazy. Content generated by LLMs like GPT4 is not just good, it’s great. In many cases, the speed and the quality far surpass what a human can do. As a result, people start to blindly trust AI to create safe and accurate responses.

We have already seen real-world scenarios in which Copilot drafts a proposal for a client and includes sensitive data belonging to a completely different client. The user hits “send” after a quick glance (or no glance), and now you have a privacy or data breach scenario on your hands.

Getting your tenant security-ready for Copilot

It’s critical to have a sense of your data security posture before your Copilot rollout. Now that Copilot is generally available, it is a great time to get your security controls in place.

Varonis protects thousands of Microsoft 365 customers with our Data Security Platform, which provides a real-time view of risk and the ability to automatically enforce least privilege.

We can help you address the biggest security risks with Copilot with virtually no manual effort. With Varonis for Microsoft 365, you can:

- Automatically discover and classify all sensitive AI-generated content.

- Automatically ensure that MPIP labels are correctly applied.

- Automatically enforce least privilege permissions.

- Continuously monitor sensitive data in M365 and alert and respond to abnormal behavior.

The best way to start is with a free risk assessment. It takes minutes to set up, and within a day or two you’ll have a real-time view of sensitive data risk.

This article originally appeared on the Varonis blog.

Some parts of this article are sourced from:

thehackernews.com